1-click AWS Deployment 1-click Azure Deployment

Overview

It is a NoSQL database, with documents and has got a managed memory with data operations, fast query indexers and another query machine for asking questions on SQL queries. It is very synchronized to the CouchBase server. The server of the Couchbase is for large scale websites with very low latency management of data. Few requirements for Couchbase Server are as follows:

- Too Available

- Strong Query

- High Scale

- Flexibility

- Comfortable Administration

- Work

Too Available :

It is very available because all the operations are done online without giving any obstacle to the ongoing process. You never have to go offline for updating of the system’s software or any refreshing work. Modifying of nodes are operations that do not need turning your system offline. Thus, high availability is of great use for the Couchbase users.

Strong Query :

Querying of the server is best done by the N1QL. JOIN operations, helping a high range of data models are being supported by Couchbase. N1QL has a beautiful index infrastructure and extensions for handling complicated documents with complicated structures which support the developers for producing exciting applications. A large range of tools through JDBC and ODBC access N1QL.

High Scale :

Read and write about applications all done on high weighted data using internet of things is being done by the Couch server. Continuous reads and writes for sub-milliseconds refer to the low latency of the server of the Couch base. During heavy loads, people want lesser latency and faster delivery. So high speed is taken to be very significant by the Couchbase users. With high speed even during heavy load, Couchbase performance seems to stand high, hence turning it into a user-friendly system.This server is completely memory dependent and gives high throughput during reading wb=nd writing operations with very low latency. The object managed cache is another significant factor which is well used by the database.Customer requests are done directly through machine learning of Spark and Hadoop by the data scientists and analysts.

Flexibility :

Very recent developments with no downtime are being enabled by the data model of Couchbase which is very flexible. The documents of Couchbase are about JSON which represents structures and their relationships in a self-mode format.Representing the server with the code of applications and save in materials. The coders add newer objects at any time by adding newer application code storing new JSON with no sudden changes. This makes the applications grow really fast.

Comfortable Administration :

The server of Couch base is very simple to manage and organize. Automatic replication makes the management of the server very simple and easy.Even a change in the topology is also automatically done without any change to the application. The software is also updated. The clusters can be expanded with a single click which is very well managed by only one administrator.

Work :

The two basic factors of the server are sharding as well as replication. Data is distributed in the clusters. By adding RAM, disk by not pressurizing more on the developers and administrators the database is consistently growing horizontally. For best use the server maintains a very high efficiency of its own. As a result, the number of clients and input-output storage increases. As a result of the evenly distributed workloads, the hardware turns user-friendly.

Couchbase Architecture

Couchbase is the merge of two popular NOSQL technologies:

- Membase, which provides persistence, replication, sharding to the high performance memcached technology

- CouchDB, which pioneers the document oriented model based on JSON

Like other NOSQL technologies, both Membase and CouchDB are built from the ground up on a highly distributed architecture, with data shard across machines in a cluster. Built around the Memcached protocol, Membase provides an easy migration to existing Memcached users who want to add persistence, sharding and fault resilience on their familiar Memcached model. On the other hand, CouchDB provides first class support for storing JSON documents as well as a simple RESTful API to access them. Underneath, CouchDB also has a highly tuned storage engine that is optimized for both update transaction as well as query processing. Taking the best of both technologies, Membase is well-positioned in the NOSQL marketplace.

Programming model

Couchbase provides client libraries for different programming languages such as Java / .NET / PHP / Ruby / C / Python / Node.js

For read, Couchbase provides a key-based lookup mechanism where the client is expected to provide the key, and only the server hosting the data (with that key) will be contacted.

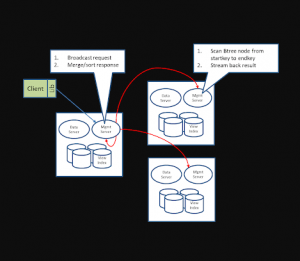

Couchbase also provides a query mechanism to retrieve data where the client provides a query (for example, range based on some secondary key) as well as the view (basically the index). The query will be broadcasted to all servers in the cluster and the result will be merged and sent back to the client.

For write, Couchbase provides a key-based update mechanism where the client sends in an updated document with the key (as doc id). When handling write request, the server will return to client’s write request as soon as the data is stored in RAM on the active server, which offers the lowest latency for write requests.

Following is the core API that Couchbase offers. (in an abstract sense)

# Get a document by key doc = get(key) # Modify a document, notice the whole document # need to be passed in set(key, doc) # Modify a document when no one has modified it # since my last read casVersion = doc.getCas() cas(key, casVersion, changedDoc) # Create a new document, with an expiration time # after which the document will be deleted addIfNotExist(key, doc, timeToLive) # Delete a document delete(key) # When the value is an integer, increment the integer increment(key) # When the value is an integer, decrement the integer decrement(key) # When the value is an opaque byte array, append more # data into existing value append(key, newData) # Query the data results = query(viewName, queryParameters)

In Couchbase, document is the unit of manipulation. Currently Couchbase doesn’t support server-side execution of custom logic. Couchbase server is basically a passive store and unlike other document oriented DB, Couchbase doesn’t support field-level modification. In case of modifying documents, client need to retrieve documents by its key, do the modification locally and then send back the whole (modified) document back to the server. This design tradeoff network bandwidth (since more data will be transferred across the network) for CPU (now CPU load shift to client).

Couchbase currently doesn’t support bulk modification based on a condition matching. Modification happens only in a per document basis. (client will save the modified document one at a time).

Transaction Model

Similar to many NOSQL databases, Couchbase’s transaction model is primitive as compared to RDBMS. Atomicity is guaranteed at a single document and transactions that span update of multiple documents are unsupported. To provide necessary isolation for concurrent access, Couchbase provides a CAS (compare and swap) mechanism which works as follows …

- When the client retrieves a document, a CAS ID (equivalent to a revision number) is attached to it.

- While the client is manipulating the retrieved document locally, another client may modify this document. When this happens, the CAS ID of the document at the server will be incremented.

- Now, when the original client submits its modification to the server, it can attach the original CAS ID in its request. The server will verify this ID with the actual ID in the server. If they differ, the document has been updated in between and the server will not apply the update.

- The original client will re-read the document (which now has a newer ID) and re-submit its modification.

Couchbase also provides a locking mechanism for clients to coordinate their access to documents. Clients can request a LOCK on the document it intends to modify, update the documents and then releases the LOCK. To prevent a deadlock situation, each LOCK grant has a timeout so it will automatically be released after a period of time.

Deployment Architecture

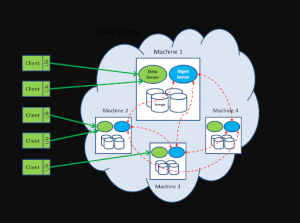

In a typical setting, a Couchbase DB resides in a server clusters involving multiple machines. Client library will connect to the appropriate servers to access the data. Each machine contains a number of daemon processes which provides data access as well as management functions.

The data server, written in C/C++, is responsible to handle get/set/delete request from client. The Management server, written in Erlang, is responsible to handle the query traffic from client, as well as manage the configuration and communicate with other member nodes in the cluster.

Virtual Buckets

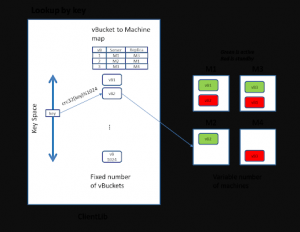

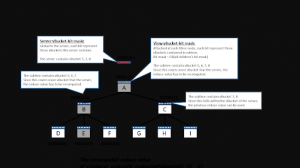

The basic unit of data storage in Couchbase DB is a JSON document (or primitive data type such as int and byte array) which is associated with a key. The overall key space is partitioned into 1024 logical storage unit called “virtual buckets” (or vBucket). vBucket are distributed across machines within the cluster via a map that is shared among servers in the cluster as well as the client library.

High availability is achieved through data replication at the vBucket level. Currently Couchbase supports one active vBucket zero or more standby replicas hosted in other machines. Curremtly the standby server are idle and not serving any client request. In future version of Couchbase, the standby replica will be able to serve read request.

Load balancing in Couchbase is achieved as follows:

- Keys are uniformly distributed based on the hash function

- When machines are added and removed in the cluster. The administrator can request a redistribution of vBucket so that data are evenly spread across physical machines.

Management Server

Management server performs the management function and co-ordinate the other nodes within the cluster. It includes the following monitoring and administration functions

Heartbeat: A watchdog process periodically communicates with all member nodes within the same cluster to provide Couchbase Server health updates.

Process monitor: This subsystem monitors execution of the local data manager, restarting failed processes as required and provide status information to the heartbeat module.

Configuration manager: Each Couchbase Server node shares a cluster-wide configuration which contains the member nodes within the cluster, a vBucket map. The configuration manager pull this config from other member nodes at bootup time.

Within a cluster, one node’s Management Server will be elected as the leader which performs the following cluster-wide management function

- Controls the distribution of vBuckets among other nodes and initiate vBucket migration

- Orchestrates the failover and update the configuration manager of member nodes

If the leader node crashes, a new leader will be elected from surviving members in the cluster.

When a machine in the cluster has crashed, the leader will detect that and notify member machines in the cluster that all vBuckets hosted in the crashed machine is dead. After getting this signal, machines hosting the corresponding vBucket replica will set the vBucket status as “active”. The vBucket/server map is updated and eventually propagated to the client lib. Notice that at this moment, the replication level of the vBucket will be reduced. Couchbase doesn’t automatically re-create new replicas which will cause data copying traffic. Administrator can issue a command to explicitly initiate a data rebalancing. The crashed machine, after reboot can rejoin the cluster. At this moment, all the data it stores previously will be completely discard and the machine will be treated as a brand new empty machine.

As more machines are put into the cluster (for scaling out), vBucket should be redistributed to achieve a load balance. This is currently triggered by an explicit command from the administrator. Once receive the “rebalance” command, the leader will compute the new provisional map which has the balanced distribution of vBuckets and send this provisional map to all members of the cluster.

To compute the vBucket map and migration plan, the leader attempts the following objectives:

- Evenly distribute the number of active vBuckets and replica vBuckets among member nodes.

- Place the active copy and each replicas in physically separated nodes.

- Spread the replica vBucket as wide as possible among other member nodes.

- Minimize the amount of data migration

- Orchestrate the steps of replica redistribution so no node or network will be overwhelmed by the replica migration.

Once the vBucket maps is determined, the leader will pass the redistribution map to each member in the cluster and coordinate the steps of vBucket migration. The actual data transfer happens directly between the origination node to the destination node.

Notice that since we have generally more vBuckets than machines. The workload of migration will be evenly distributed automatically. For example, when new machines are added into the clusters, all existing machines will migrate some portion of its vBucket to the new machines. There is no single bottleneck in the cluster.

Throughput the migration and redistribution of vBucket among servers, the life cycle of a vBucket in a server will be in one of the following states

- “Active”: means the server is hosting the vBucket is ready to handle both read and write request

- “Replica”: means the server is hosting the a copy of the vBucket that may be slightly out of date but can take read request that can tolerate some degree of outdate.

- “Pending”: means the server is hosting a copy that is in a critical transitional state. The server cannot take either read or write request at this moment.

- “Dead”: means the server is no longer responsible for the vBucket and will not take either read or write request anymore.

Data Server

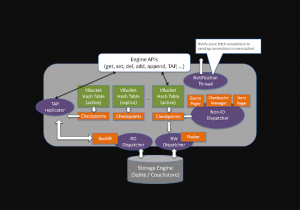

Data server implements the memcached APIs such as get, set, delete, append, prepend, etc. It contains the following key datastructure:

- One in-memory hashtable (key by doc id) for the corresponding vBucket hosted. The hashtable acts as both a metadata for all documents as well as a cache for the document content. Maintain the entry gives a quick way to detect whether the document exists on disk.

- To support async write, there is a checkpoint linkedlist per vBucket holding the doc id of modified documents that hasn’t been flushed to disk or replicated to the replica.

To handle a “GET” request

- Data server routes the request to the corresponding ep-engine responsible for the vBucket.

- The ep-engine will lookup the document id from the in-memory hastable. If the document content is found in cache (stored in the value of the hashtable), it will be returned. Otherwise, a background disk fetch task will be created and queued into the RO dispatcher queue.

- The RO dispatcher then reads the value from the underlying storage engine and populates the corresponding entry in the vbucket hash table.

- Finally, the notification thread notifies the disk fetch completion to the memcached pending connection, so that the memcached worker thread can revisit the engine to process a get request.

To handle a “SET” request, a success response will be returned to the calling client once the updated document has been put into the in-memory hashtable with a write request put into the checkpoint buffer. Later on the Flusher thread will pickup the outstanding write request from each checkpoint buffer, lookup the corresponding document content from the hashtable and write it out to the storage engine.

Of course, data can be lost if the server crashes before the data has been replicated to another server and/or persisted. If the client requires a high data availability across different crashes, it can issue a subsequent observe() call which blocks on the condition that the server persist data on disk, or the server has replicated the data to another server (and get its ACK). Overall speaking, the client has various options to tradeoff data integrity with throughput.

Hashtable Management

To synchronize accesses to a vbucket hash table, each incoming thread needs to acquire a lock before accessing a key region of the hash table. There are multiple locks per vbucket hash table, each of which is responsible for controlling exclusive accesses to a certain ket region on that hash table. The number of regions of a hash table can grow dynamically as more documents are inserted into the hash table.

To control the memory size of the hashtable, Item pager thread will monitor the memory utilization of the hashtable. Once a high watermark is reached, it will initiate an eviction process to remove certain document content from the hashtable. Only entries that is not referenced by entries in the checkpoint buffer can be evicted because otherwise the outstanding update (which only exists in hashtable but not persisted) will be lost.

After eviction, the entry of the document still remains in the hashtable; only the document content of the document will be removed from memory but the metadata is still there. The eviction process stops after reaching the low watermark. The high / low water mark is determined by the bucket memory quota. By default, the high water mark is set to 75% of bucket quota, while the low water mark is set to 60% of bucket quota. These water marks can be configurable at runtime.

In CouchDb, every document is associated with an expiration time and will be deleted once it is expired. Expiry pager is responsible for tracking and removing expired document from both the hashtable as well as the storage engine (by scheduling a delete operation).

Checkpoint Manager

Checkpoint manager is responsible to recycle the checkpoint buffer, which holds the outstanding update request, consumed by the two downstream processes, Flusher and TAP replicator. When all the request in the checkpoint buffer has been processed, the checkpoint buffer will be deleted and a new one will be created.

TAP Replicator

TAP replicator is responsible to handle vBucket migration as well as vBucket replication from active server to replica server. It does this by propagating the latest modified document to the corresponding replica server.

At the time a replica vBucket is established, the entire vBucket need to be copied from the active server to the empty destination replica server as follows

- The in-memory hashtable at the active server will be transferred to the replica server. Notice that during this period, some data may be updated and therefore the data set transfered to the replica can be inconsistent (some are the latest and some are outdated).

- Nevertheless, all updates happen after the start of transfer is tracked in the checkpoint buffer.

- Therefore, after the in-memory hashtable transferred is completed, the TAP replicator can pickup those updates from the checkpoint buffer. This ensures the latest versioned of changed documents are sent to the replica, and hence fix the inconsistency.

- However the hashtable cache doesn’t contain all the document content. Data also need to be read from the vBucket file and send to the replica. Notice that during this period, update of vBucket will happen in active server. However, since the file is appended only, subsequent data update won’t interfere the vBucket copying process.

After the replica server has caught up, subsequent update at the active server will be available at its checkpoint buffer which will be pickup by the TAP replicator and send to the replica server.

CouchDB Storage Structure

Data server defines an interface where different storage structure can be plugged-in. Currently it supports both a SQLite DB as well as CouchDB. Here we describe the details of CouchDb, which provides a super high performance storage mechanism underneath the Couchbase technology.

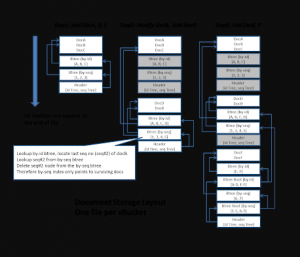

Under the CouchDB structure, there will be one file per vBucket. Data are written to this file in an append-only manner, which enables Couchbase to do mostly sequential writes for update, and provide the most optimized access patterns for disk I/O. This unique storage structure attributes to Couchbase’s fast on-disk performance for write-intensive applications.

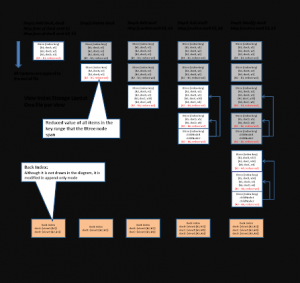

The following diagram illustrate the storage model and how it is modified by 3 batch updates (notice that since updates are asynchronous, it is perform by “Flusher” thread in batches).

The Flusher thread works as follows:

1) Pick up all pending write request from the dirty queue and de-duplicate multiple update request to the same document.

2) Sort each request (by key) into corresponding vBucket and open the corresponding file

3) Append the following into the vBucket file (in the following contiguous sequence)

- All document contents in such write request batch. Each document will be written as [length, crc, content] one after one sequentially.

- The index that stores the mapping from document id to the document’s position on disk (called the BTree by-id)

- The index that stores the mapping from update sequence number to the document’s position on disk. (called the BTree by-seq)

The by-id index plays an important role for looking up the document by its id. It is organized as a B-Tree where each node contains a key range. To lookup a document by id, we just need to start from the header (which is the end of the file), transfer to the root BTree node of the by-id index, and then further traverse to the leaf BTree node that contains the pointer to the actual document position on disk.

During the write, the similar mechanism is used to trace back to the corresponding BTree node that contains the id of the modified documents. Notice that in the append-only model, update is not happening in-place, instead we located the existing location and copy it over by appending. In other words, the modified BTree node will be need to be copied over and modified and finally paste to the end of file, and then its parent need to be modified to point to the new location, which triggers the parents to be copied over and paste to the end of file. Same happens to its parents’ parent and eventually all the way to the root node of the BTree. The disk seek can be at the O(logN) complexity.

The by-seq index is used to keep track of the update sequence of lived documents and is used for asynchronous catchup purposes. When a document is created, modified or deleted, a sequence number is added to the by-seq btree and the previous seq node will be deleted. Therefore, for cross-site replication, view index update and compaction, we can quickly locate all the lived documents in the order of their update sequence. When a vBucket replicator asks for the list of update since a particular time, it provides the last sequence number in previous update, the system will then scan through the by-seq BTree node to locate all the document that has sequence number larger than that, which effectively includes all the document that has been modified since the last replication.

As time goes by, certain data becomes garbage (see the grey-out region above) and become unreachable in the file. Therefore, we need a garbage collection mechanism to clean up the garbage. To trigger this process, the by-id and by-seq B-Tree node will keep track of the data size of lived documents (those that is not garbage) under its substree. Therefore, by examining the root BTree node, we can determine the size of all lived documents within the vBucket. When the ratio of actual size and vBucket file size fall below a certain threshold, a compaction process will be triggered whose job is to open the vBucket file and copy the survived data to another file.

Technically, the compaction process opens the file and read the by-seq BTree at the end of the file. It traces the Btree all the way to the leaf node and copy the corresponding document content to the new file. The compaction process happens while the vBucket is being updated. However, since the file is appended only, new changes are recorded after the BTree root that the compaction has opened, so subsequent data update won’t interfere with the compaction process. When the compaction is completed, the system need to copy over the data that was appended since the beginning of the compaction to the new file.

View Index Structure

Unlike most indexing structure which provide a pointer from the search attribute back to the document. The CouchDb index (called View Index) is better perceived as a denormalized table with arbitrary keys and values loosely associated to the document.

Such denormalized table is defined by a user-provided map() and reduce() function.

map = function(doc) {

…

emit(k1, v1)

…

emit(k2, v2)

…

}

reduce = function(keys, values, isRereduce) {

if (isRereduce) {

// Do the re-reduce only on values (keys will be null)

} else {

// Do the reduce on keys and values

}

// result must be ready for input values to re-reduce

return result

}

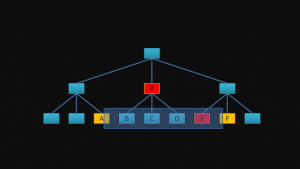

Whenever a document is created, updated, deleted, the corresponding map(doc) function will be invoked (in an asynchronous manner) to generate a set of key/value pairs. Such key/value will be stored in a B-Tree structure. All the key/values pairs of each B-Tree node will be passed into the reduce() function, which compute an aggregated value within that B-Tree node. Re-reduce also happens in non-leaf B-Tree nodes which further aggregate the aggregated value of child B-Tree nodes.

The management server maintains the view index and persisted it to a separate file.

Create a view index is perform by broadcast the index creation request to all machines in the cluster. The management process of each machine will read its active vBucket file and feed each surviving document to the Map function. The key/value pairs emitted by the Map function will be stored in a separated BTree index file. When writing out the BTree node, the reduce() function will be called with the list of all values in the tree node. Its return result represent a partially reduced value is attached to the BTree node.

The view index will be updated incrementally as documents are subsequently getting into the system. Periodically, the management process will open the vBucket file and scan all documents since the last sequence number. For each changed document since the last sync, it invokes the corresponding map function to determine the corresponding key/value into the BTree node. The BTree node will be split if appropriate.

Underlying, Couchbase use a back index to keep track of the document with the keys that it previously emitted. Later when the document is deleted, it can look up the back index to determine what those key are and remove them. In case the document is updated, the back index can also be examined; semantically a modification is equivalent to a delete followed by an insert.

The following diagram illustrates how the view index file will be incrementally updated via the append-only mechanism.

Query Processing

Query in Couchbase is made against the view index. A query is composed of the view name, a start key and end key. If the reduce() function isn’t defined, the query result will be the list of values sorted by the keys within the key range. In case the reduce() function is defined, the query result will be a single aggregated value of all keys within the key range.

If the view has no reduce() function defined, the query processing proceeds as follows:

- Client issue a query (with view, start/end key) to the management process of any server (unlike a key based lookup, there is no need to locate a specific server).

- The management process will broadcast the request to other management process on all servers (include itself) within the cluster.

- Each management process (after receiving the broadcast request) do a local search for value within the key range by traversing the BTree node of its view file, and start sending back the result (automatically sorted by the key) to the initial server.

- The initial server will merge the sorted result and stream them back to the client.

However, if the view has reduce() function defined, the query processing will involve computing a single aggregated value as follows:

- Client issue a query (with view, start/end key) to the management process of any server (unlike a key based lookup, there is no need to locate a specific server).

- The management process will broadcast the request to other management process on all servers (include itself) within the cluster.

- Each management process do a local reduce for value within the key range by traversing the BTree node of its view file to compute the reduce value of the key range. If the key range span across a BTree node, the pre-computed of the sub-range can be used. This way, the reduce function can reuse a lot of partially reduced values and doesn’t need to recomputed every value of the key range from scratch.

- The original server will do a final re-reduce() in all the return value from each other servers, and then passed back the final reduced value to the client.

To illustrate the re-reduce concept, lets say the query has its key range from A to F.

Instead of calling reduce([A,B,C,D,E,F]), the system recognize the BTree node that contains [B,C,D] has been pre-reduced and the result P is stored in the BTree node, so it only need to call reduce(A,P,E,F).

Update View Index as vBucket migrates

Since the view index is synchronized with the vBuckets in the same server, when the vBucket has migrated to a different server, the view index is no longer correct; those key/value that belong to a migrated vBucket should be discarded and the reduce value cannot be used anymore.

To keep track of the vBucket and key in the view index, each bTree node has a 1024-bitmask indicating all the vBuckets that is covered in the subtree (ie: it contains a key emitted from a document belonging to the vBucket). Such bit-mask is maintained whenever the bTree node is updated.

At the server-level, a global bitmask is used to indicate all the vBuckets that this server is responsible for.

In processing the query of the map-only view, before the key/value pair is returned, an extra check will be perform for each key/value pair to make sure its associated vBucket is what this server is responsible for.

When processing the query of a view that has a reduce() function, we cannot use the pre-computed reduce value if the bTree node contains a vBucket that the server is not responsible for. In this case, the bTree node’s bit mask is compared with the global bit mask. In case if they are not aligned, then the reduce value need to be recomputed.

Here is an example to illustrate this process

Couchbase is one of the popular NOSQL technology built on a solid technology foundation designed for high performance. In this post, we have examined a number of such key features:

- Load balancing between servers inside a cluster that can grow and shrink according to workload conditions. Data migration can be used to re-achieve workload balance.

- Asynchronous write provides lowest possible latency to client as it returns once the data is store in memory.

- Append-only update model pushes most update transaction into sequential disk access, hence provide extremely high throughput for write intensive applications.

- Automatic compaction ensures the data lay out on disk are kept optimized all the time.

- Map function can be used to pre-compute view index to enable query access. Summary data can be pre-aggregated using the reduce function. Overall, this cut down the workload of query processing dramatically.

Installing CouchDB in Windows

Download CouchDB

The official website for CouchDB is https://couchdb.apache.org. If you click the given link, you can get the home page of the CouchDB official website as shown below.

If you click on the download button that will lead to a page where download links of CouchDB in various formats are provided. The following snapshot illustrates the same.

Choose the download link for windows systems and select one of the provided mirrors to start your download.

Installing CouchDB

CouchDB will be downloaded to your system in the form of setup file named setup-couchdb-1.6.1_R16B02.exe. Run the setup file and proceed with the installation.

After installation, open built-in web interface of CouchDB by visiting the following link: http://127.0.0.1:5984/. If everything goes fine, this will give you a web page, which have the following output.

{ "couchdb":"Welcome","uuid":"c8d48ac61bb497f4692b346e0f400d60", "version":"1.6.1", "vendor":{ "version":"1.6.1","name":"The Apache Software Foundation" } }

You can interact with the CouchDB web interface by using the following url −

http://127.0.0.1:5984/_utils/

This shows you the index page of Futon, which is the web interface of CouchDB.

Installing CouchDB in Linux Systems

For many of the Linux flavored systems, they provide CouchDB internally. To install this CouchDB follow the instructions.

On Ubuntu and Debian you can use −

sudo aptitude install couchdb

On Gentoo Linux there is a CouchDB ebuild available −

sudo emerge couchdb

If your Linux system does not have CouchDB, follow the next section to install CouchDB and its dependencies.

Installing CouchDB Dependencies

Following is the list of dependencies that are to be installed to get CouchDB in your system−

- Erlang OTP

- ICU

- OpenSSL

- Mozilla SpiderMonkey

- GNU Make

- GNU Compiler Collection

- libcurl

- help2man

- Python for docs

- Python Sphinx

To install these dependencies, type the following commands in the terminal. Here we are using Centos 6.5 and the following commands will install the required softwares compatible to Centos 6.5.

$sudo yum install autoconf $sudo yum install autoconf-archive $sudo yum install automake $sudo yum install curl-devel $sudo yum install erlang-asn1 $sudo yum install erlang-erts $sudo yum install erlang-eunit $sudo yum install erlang-os_mon $sudo yum install erlang-xmerl $sudo yum install help2man $sudo yum install js-devel $sudo yum install libicu-devel $sudo yum install libtool $sudo yum install perl-Test-Harness

For all these commands you need to use sudo. The following procedure converts a normal user to a sudoer.

- Login as root in admin user

- Open sudo file using the following command −

visudo

- Then edit as shown below to give your existing user the sudoer privileges −

Hadoop All=(All) All , and press esc : x to write the changes to the file.

After downloading all the dependencies in your system, download CouchDB following the given instructions.

Downloading CouchDB

Apache software foundation will not provide the complete .tar file for CouchDB, so you have to install it from the source.

Create a new directory to install CouchDB, browse to such created directory and download CouchDB source by executing the following commands −

$ cd $ mkdir CouchDB $ cd CouchDB/ $ wget http://www.google.com/url?q=http%3A%2F%2Fwww.apache.org%2Fdist%2Fcouchdb%2Fsource%2F1.6.1%2Fapache-couchdb-1.6.1.tar.gz

This will download CouchDB source file into your system. Now unzip the apache-couchdb-1.6.1.tar.gz as shown below.

$ tar zxvf apache-couchdb-1.6.1.tar.gz

Configuring CouchDB

To configure CouchDB, do the following −

- Browse to the home folder of CouchDB.

- Login as superuser.

- Configure using ./configure prompt as shown below −

$ cd apache-couchdb-1.6.1 $ su Password: # ./configure --with-erlang=/usr/lib64/erlang/usr/include/

It gives you the following output similar to that shown below with a concluding line saying − You have configured Apache CouchDB, time to relax.

# ./configure --with-erlang=/usr/lib64/erlang/usr/include/ checking for a BSD-compatible install... /usr/bin/install -c checking whether build environment is sane... yes checking for a thread-safe mkdir -p... /bin/mkdir -p checking for gawk... gawk checking whether make sets $(MAKE)... yes checking how to create a ustar tar archive... gnutar ……………………………………………………….. ………………………. config.status: creating var/Makefile config.status: creating config.h config.status: config.h is unchanged config.status: creating src/snappy/google-snappy/config.h config.status: src/snappy/google-snappy/config.h is unchanged config.status: executing depfiles commands config.status: executing libtool commands You have configured Apache CouchDB, time to relax. Run `make && sudo make install' to install.

Installing CouchDB

Now type the following command to install CouchDB in your system.

# make && sudo make install

It installs CouchDB in your system with a concluding line saying − You have installed Apache CouchDB, time to relax.

Starting CouchDB

To start CouchDB, browse to the CouchDB home folder and use the following command −

$ cd apache-couchdb-1.6.1 $ cd etc $ couchdb start

It starts CouchDB giving the following output: −

Apache CouchDB 1.6.1 (LogLevel=info) is starting. Apache CouchDB has started. Time to relax. [info] [lt;0.31.0gt;] Apache CouchDB has started on http://127.0.0.1:5984/ [info] [lt;0.112.0gt;] 127.0.0.1 - - GET / 200 [info] [lt;0.112.0gt;] 127.0.0.1 - - GET /favicon.ico 200

Verification

Since CouchDB is a web interface, try to type the following homepage url in the browser.

http://127.0.0.1:5984/

It produces the following output −

{

"couchdb":"Welcome",

"uuid":"8f0d59acd0e179f5e9f0075fa1f5e804",

"version":"1.6.1",

"vendor":{

"name":"The Apache Software Foundation",

"version":"1.6.1"

}

}

CouchDB – Creating a Database

Creating a Database using Futon

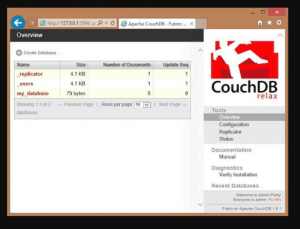

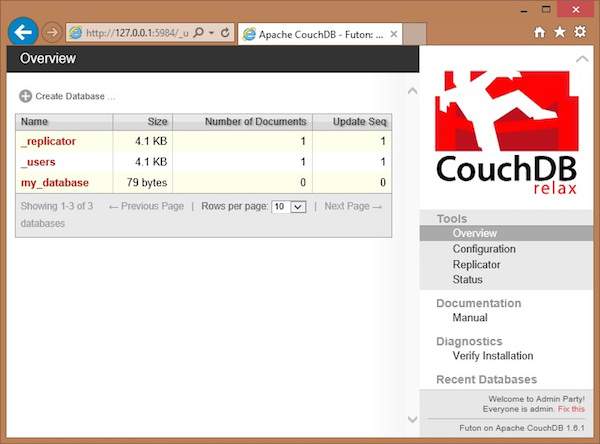

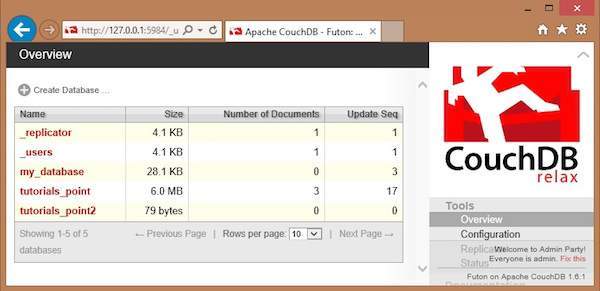

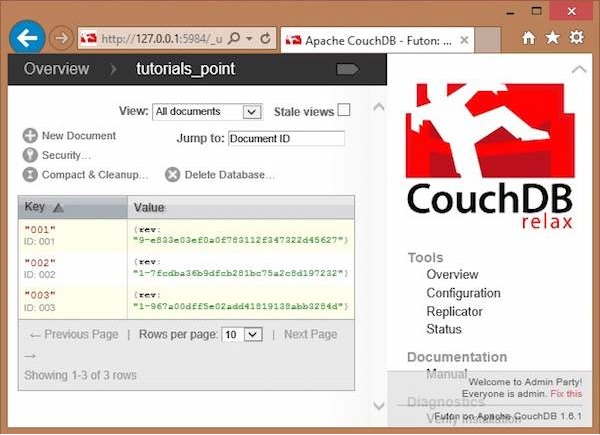

To create a database open the http://127.0.0.1:5984/_utils/. You will get an Overview/index page of CouchDB as shown below.

In this page, you can see the list of databases in CouchDB, an option button Create Database on the left hand side.

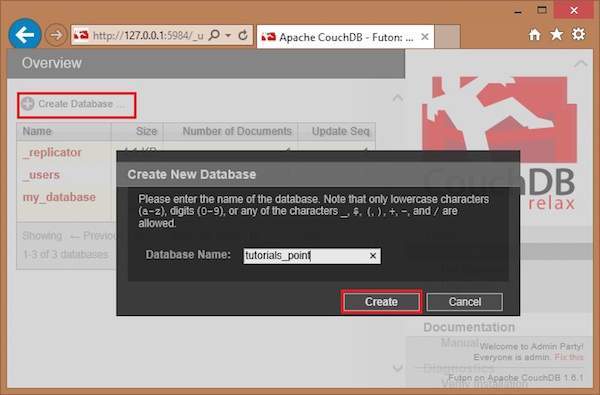

Now click on the create database link. You can see a popup window Create New Databases asking for the database name for the new database. Choose any name following the mentioned criteria. Here we are creating another database with name tutorials_point. Click on the create button as shown in the following screenshot.

CouchDB – Deleting a Database

Deleting a Database using Futon

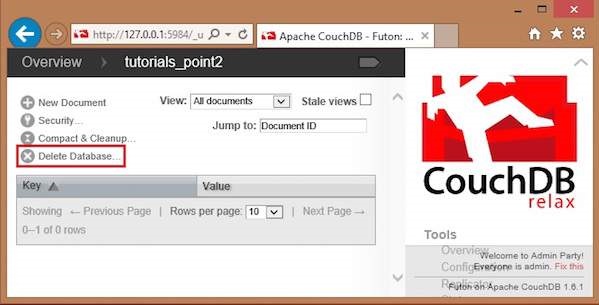

To delete a database, open the http://127.0.0.1:5984/_utils/ url where you will get an Overview/index page of CouchDB as shown below.

Here you can see three user created databases. Let us delete the database named tutorials_point2. To delete a database, select one from the list of databases, and click on it, which will lead to the overview page of the selected database where you can see the various operations on databases. The following screenshot shows the same −

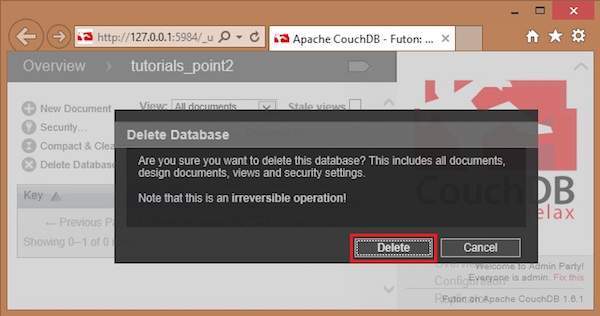

Among them you can find Delete Database option. By clicking on it you will get a popup window, asking whether you are sure! Click on delete, to delete the selected database.

CouchDB – Creating a Document

Creating a Document using Futon

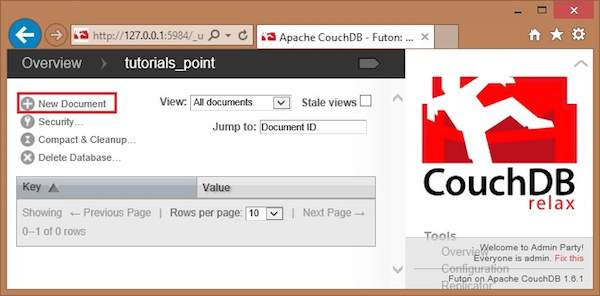

To Create a document open the http://127.0.0.1:5984/_utils/ url to get an Overview/index page of CouchDB as shown below.

Select the database in which you want to create the document. Open the Overview page of the database and select New Document option as shown below.

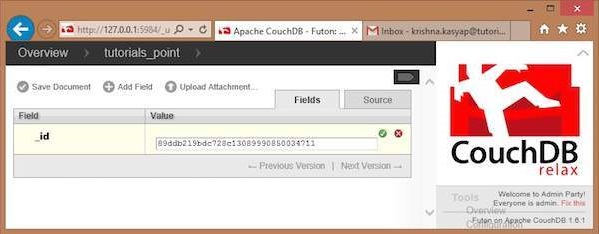

When you select the New Document option, CouchDB creates a new database document, assigning it a new id. You can edit the value of the id and can assign your own value in the form of a string. In the following illustration, we have created a new document with an id 001.

In this page, you can observe three options − save Document, Add Field and Upload Attachment.

Add Field to the Document

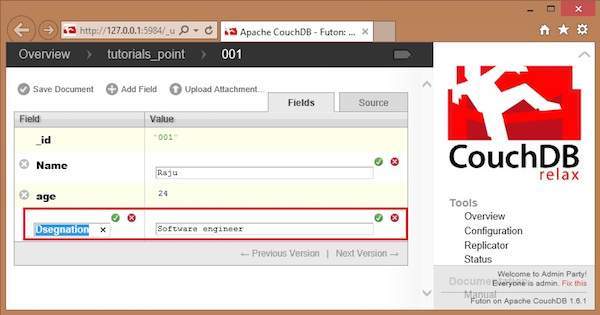

To add field to the document click on Add Field option. After creating a database, you can add a field to it using this option. Clicking on it will get you a pair of text boxes, namely, Field, value. You can edit these values by clicking on them. Edit those values and type your desired Field-Value pair. Click on the green button to save these values.

In the following illustration, we have created three fields Name, age and, Designation of the employee.

Save Document

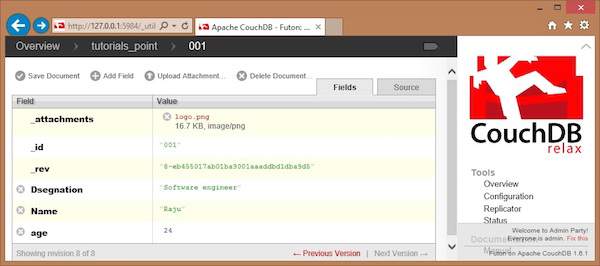

You can save the changes made to the document by clicking on this option. After saving, a new id _rev will be generated as shown below.

CouchDB – Updating a Document

Updating Documents using Futon

To delete a document open the http://127.0.0.1:5984/_utils/ url to get an Overview/index page of CouchDB as shown below.

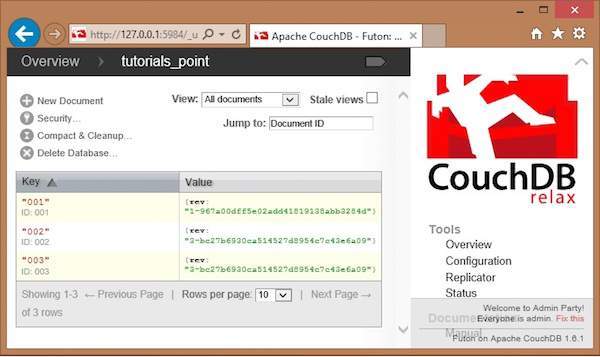

Select the database in which the document to be updated exists and click it. Here we are updating a document in the database named tutorials_point. You will get the list of documents in the database as shown below.

Select a document that you want to update and click on it. You will get the contents of the documents as shown below.

Here, to update the location from Delhi to Hyderabad, click on the text box, edit the field, and click the green button to save the changes as shown below.

CouchDB – Deleting a Document

Deleting a Document using Futon

First of all, verify the documents in the database. Following is the snapshot of the database named tutorials_point.

Here you can observe, the database consists of three documents. To delete any of the documents say 003, do the following −

- Click on the document, you will get a page showing the contents of selected document in the form of field-value pairs.

- This page also contains four options namely Save Document, Add Field, Upload Attachment, Delete Document.

- Click on Delete Document option.

- You will get a dialog box saying “Are you sure you want to delete this document?” Click on delete, to delete the document.

CouchDB – Attaching Files

Attaching Files using Futon

Upload Attachment

Using this option, you can upload a new attachment such as a file, image, or document, to the database. To do so, click on the Upload Attachment button. A dialog box will appear where you can choose the file to be uploaded. Select the file and click on the Upload button.

The file uploaded will be displayed under _attachments field. Later you can see the file by clicking on it.

Some of the significant advantages the Couchbase database holds over other databases:

Unsurpassed performance at a level: It can handle any volume, speed, or intensity of data. Applications run 10 to 20 times better on Couchbase.

Exceptional developer agility and flexibility:

Couchbase database has a 19% faster development circuiting and a 40% more rapid response. This enhanced application development agility is done by the use of a declarative query language, full-text search, and adaptive indexing capabilities: all these plus seamless data mobility.

-

Easy to manage.

Its smooth configuration and set up make use of multi-cloud agility and help ensure availability with five times reliability. Couchbase is so easy to maintain and has a 37% more efficient database management, 17% more efficient help desk support, and a 14% lower database licensing cost. The Couchbase database holds a competitive advantage over other databases in many different fields which include:

- Elastic architecture.

- Location and deployment are agnostic.

- Schema flexibility with SQL.

- Multi-dimensional scaling and workload isolation

- Built-in replication.

- It is globally distributed.

- It is always on edge to the cloud.

The Couchbase DB is already a shared database used by many businesses and companies to interact with their customers. Examples of these companies include Carrefour, AT&T, DreamWorks Animation, eBay, Wells Fargo, and many other big companies. It has many uses:

- It aggregates customers’ data and stores recommendations, session, user profiles, and history data under one service layer.

- It is used for catalog and inventory management for media and product recommendations.

- Has field service for asset tracking and work order management.

–Couchbase Server, originally known as Membase, is an open-source, distributed (shared-nothing architecture) multi-modelNoSQL document-oriented database software package that is optimized for interactive applications. These applications may serve many concurrent users by creating, storing, retrieving, aggregating, manipulating and presenting data. In support of these kinds of application needs, Couchbase Server is designed to provide easy-to-scale key-value or JSON document access with low latency and high sustained throughput. It is designed to be clustered from a single machine to very large-scale deployments spanning many machines.

Couchbase Server provides on-the-wire client protocol compatibility with memcached,[2] but is designed to add diskpersistence, data replication, live cluster reconfiguration, rebalancing and multitenancy with data partitioning.

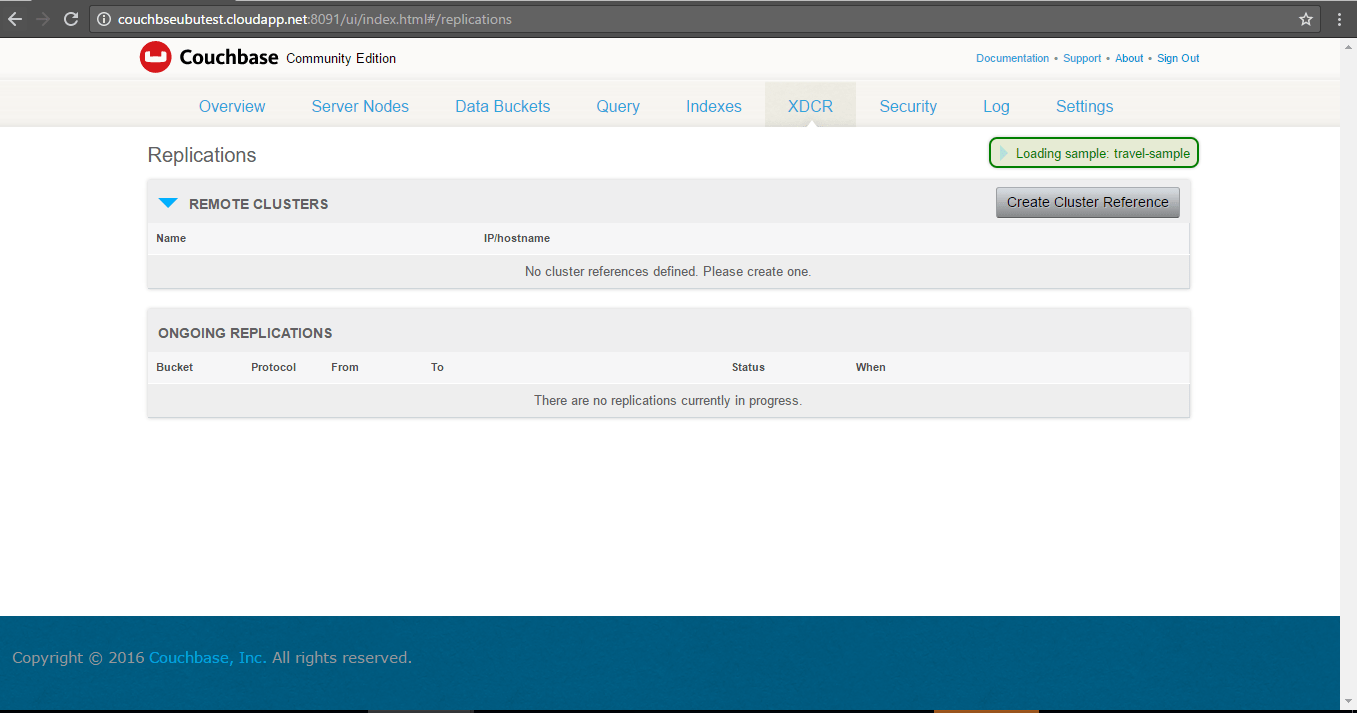

In the parlance of Eric Brewer’s CAP theorem, Couchbase is a CP type system meaning it provides consistency and partition tolerance. However Couchbase Server can be set up as an AP system with multiple clusters using XDCR (Cross Data Center Replication).

Couchbase Server is specialized to provide low-latency data management for large-scale interactive web, mobile, and IoT applications.

Couchbase Server On Cloud for Azure-Ubuntu

Features

Main Features of Couchbase :

Flexible data model

With Couchbase Server, JSON documents are used to represent application objects and the relationships between objects. This document model is flexible enough so that you can change application objects without having to migrate the database schema, or plan for significant application downtime. The other advantage of the flexible, document-based data model is that it is well suited to representing real-world items. JSON documents support nested structures, as well as fields representing relationships between items which enable you to realistically represent objects in your application.

Easy scalability

It is easy to scale with Couchbase Server, both within a cluster of servers and between clusters at different data centers. You can add additional instances of Couchbase Server to address additional users and growth in application data without any interruptions or changes in application code. With one click of a button, you can rapidly grow your cluster of Couchbase Servers to handle additional workload and keep data evenly distributed. Couchbase Server provides automatic sharding of data and rebalancing at run time; this lets you resize your server cluster on demand.

Easy developer integration

Couchbase provides client libraries for different programming languages such as Java / .NET / PHP / Ruby / C / Python / Node.jsFor read, Couchbase provides a key-based lookup mechanism where the client is expected to provide the key, and only the server hosting the data (with that key) will be contacted.

Couchbase also provides a query mechanism to retrieve data where the client provides a query (for example, a range based on some secondary key) as well as the view (basically the index). The query will be broadcast to all servers in the cluster and the result will be merged and sent back to the client.For write, Couchbase provides a key-based update mechanism where the client sends an updated document with the key (as doc id). When handling write request, the server will respond to client’s write request as soon as the data is stored in RAM on the active server, which offers the lowest latency for write requests.

Consistent high performance

Couchbase Server is designed for massively concurrent data use and consistently high throughput. It provides consistent sub-millisecond response times which help ensure an enjoyable experience for application users. By providing consistent, high data throughput, Couchbase Server enables you to support more users with fewer servers. Couchbase also automatically spreads the workload across all servers to maintain consistent performance and reduce bottlenecks at any given server in a cluster.

Reliable and secure

Couchbase support access control using username and passwords. The credentials are transmitted securely over the network. The sensitive data can be protected while it is transmitted to/from the client application.There is no single point of failure, since the data can be replicated across multiple nodes. Features such as cross-data center replication, failover, and backup and restore help ensure availability of data during server or datacenter failure.

Core Concepts

Couchbase as Document Store

The primary unit of data storage in Couchbase Server is a JSON document, which makes your application free ofrigidly-defined relational database tables. Because application objects are modeled as documents, schema migrations do not need to be performed.The binary data can be stored in documents as well, but using JSON structure allows the data to be indexed and queried using views. Couchbase Server provides a JavaScript-based query engine to find records based on field values.

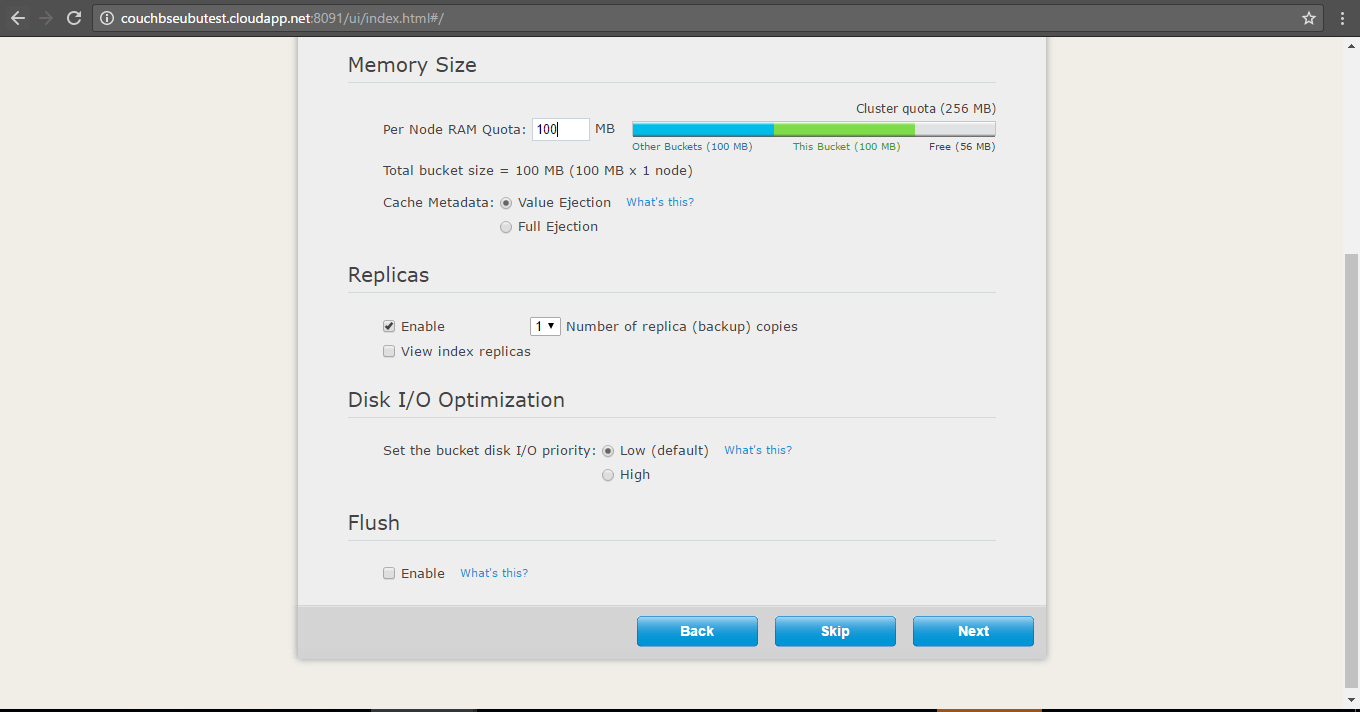

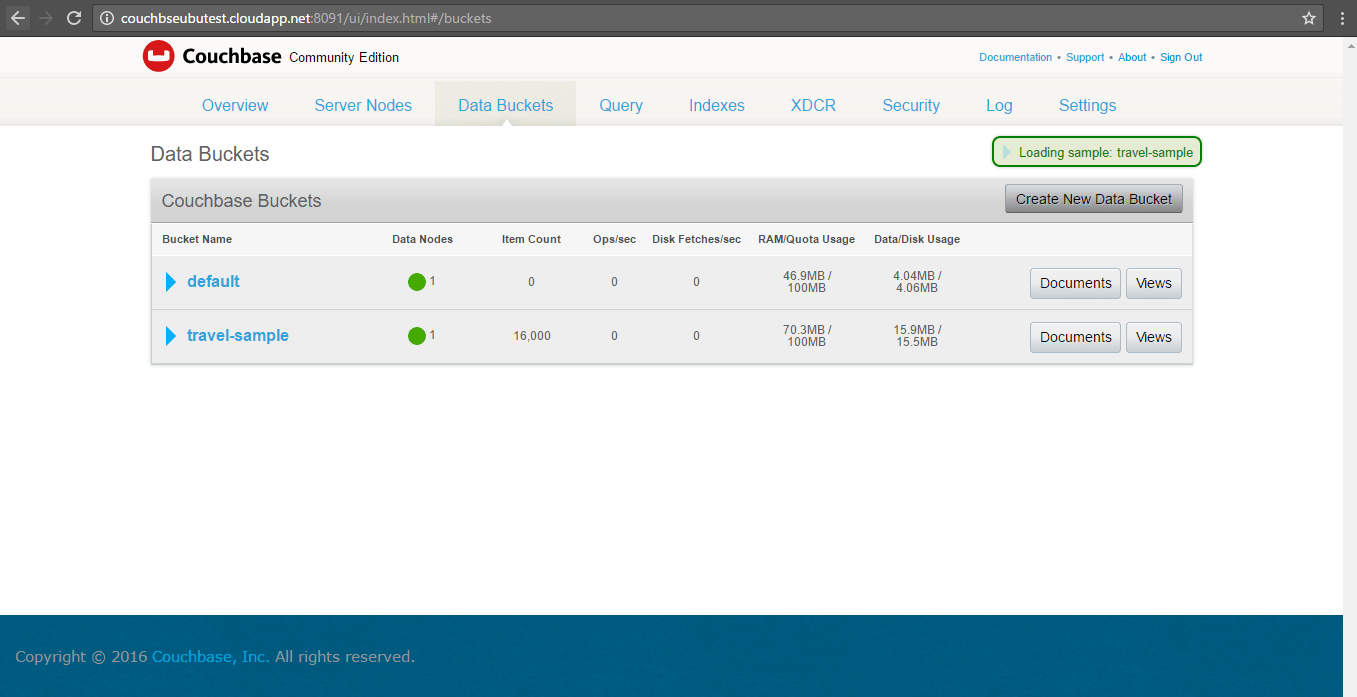

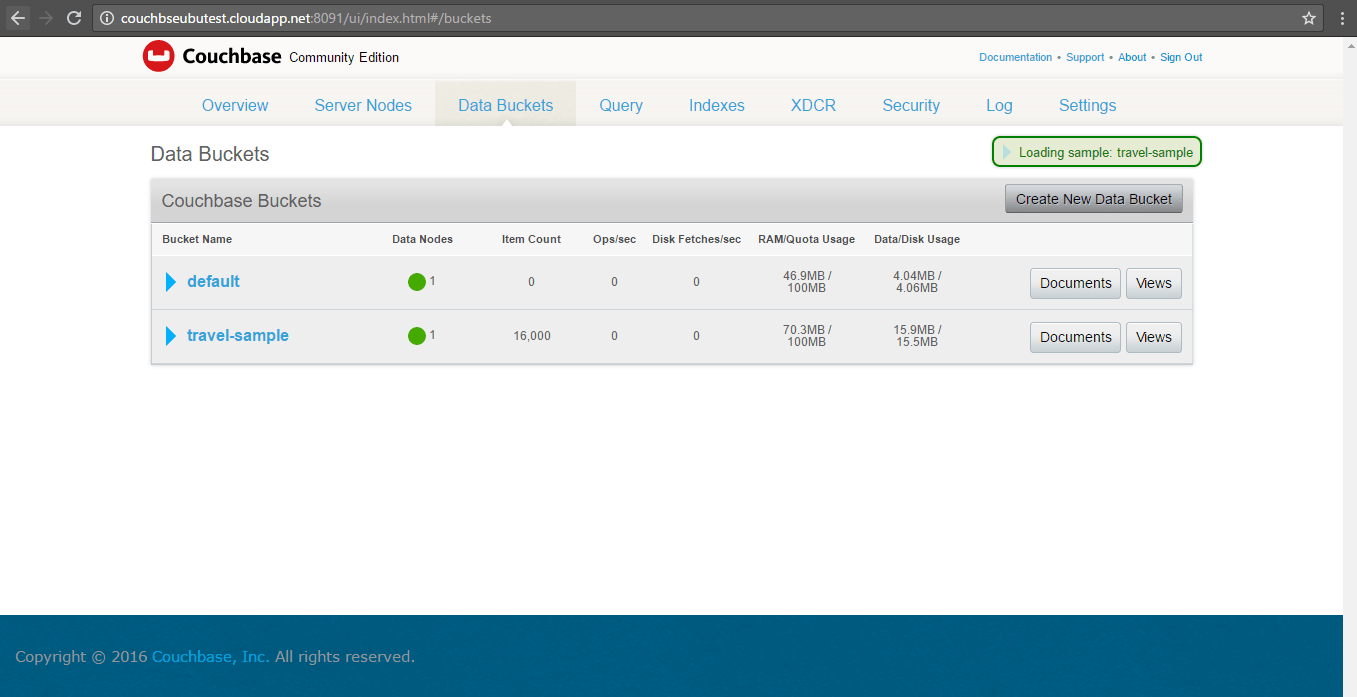

Data Buckets

The data is stored in a Couchbase cluster using buckets. Buckets are isolated, virtual containers which logically group records within a cluster. A bucket is the equivalent of a database. They provide a secure mechanism for organizing, managing and analyzing data storage.Documents are distributed uniformly across the cluster and stored in the buckets. Buckets provide a logical grouping of physical resources within a cluster. More specifically, it is possible to configure the memory for caching data or number of replicas per each bucket.

vBuckets

A vBucket is defined as the owner of a subset of the key space of a Couchbase cluster. The vBucket system is used both for distributing data across the cluster and for supporting replicas on more than one node.Every document ID belongs to a vBucket. A mapping function is used to calculate the vBucket to which a given document belongs. In Couchbase Server, that mapping function is a hashing function that takes a document ID as input and outputs a vBucket identifier. Once the vBucket identifier has been computed, a table is consulted to lookup the server that “hosts” that vBucket. The table contains one row per vBucket, pairing the vBucket to its hosting server. A server can be responsible for multiple vBuckets.

Keys and metadata

All information stored in Couchbase Server are documents with keys. Keys are unique identifiers of documents, and values can be either JSON documents or byte streams, data types, or other forms of serialized objects.Keys are also known as document IDs and serve the same function as a SQL primary keys. A key in Couchbase Server can be any string. A key is unique.By default, all documents contain three types of metadata. This information is stored with the document and is used to change how the document is handled:

- CAS Value – a form of basic optimistic concurrency.

- Time to Live (ttl) — expiration for a document (seconds)

- Flags – A variety of options during storage, retrieval, update, and removal of documents.

Couchbase SDK

Couchbase SDKs (aka client libraries) are the language-specific SDKs provided by Couchbase. A Couchbase SDK is responsible for communicating with the Couchbase Server and provides language-specific interfaces required to perform database operations.All Couchbase SDKs automatically read and write data to the right node in a cluster. If database topology changes, the SDK responds automatically and correctly distributes read/write requests to the right cluster nodes. Similarly, if your cluster experiences server failure, SDKs will automatically direct requests to still-functioning nodes.

Architectural Overview

Like other NOSQL technologies, Couchbase is built from the ground up on a highly distributed architecture, with data sharded across machines in a cluster.In a typical setting, a Couchbase DB resides in a server clusters involving multiple machines. Client library will connect to the appropriate servers to access the data.

To facilitate horizontal scaling, Couchbase uses hash sharding, which ensures that data is distributed uniformly across all nodes. The system defines 1,024 partitions (a fixed number), and once a document’s key is hashed into a specific partition, that’s where the document lives. In Couchbase Server, the key used for sharding is the document ID, a unique identifier automatically generated and attached to each document. Each partition is assigned to a specific node in the cluster. If nodes are added or removed, the system rebalances itself by migrating partitions from one node to another.

There is no single point of failure in a Couchbase system. All partition servers in a Couchbase cluster are equal, with each responsible for only that portion of the data that was assigned to it. Each server in a cluster runs two primary processes: a data manager and a cluster manager. The data manager handles the actual data in the partition, while the cluster manager deals primarily with intranode operations.

System resilience is enhanced by document replication. The cluster manager process coordinates the communication of replication data with remote nodes, and the data manager process supervise the replica data being assigned by cluster to the local node. Naturally, replica partitions are distributed throughout the cluster so that the replica copy of a partition is never on the same physical server as the active partition.

Documents are placed into buckets, and documents in one bucket are isolated from documents in other buckets from the perspective of indexing and querying operations. When a new bucket is created, it is possible to configure the number of replicas (up to three) for that bucket. If a server crashes, the system will detect the crash, locate the replicas of the documents that lived on the crashed system, and promote them to active status. The system maintains a cluster map, which defines the topology of the cluster, and this is updated in response to the crash.

Note that this scheme relies on thick clients embodied in the API libraries that applications use to communicate with Couchbase. Thick clients are in constant communication with server nodes. They fetch the updated cluster map, then reroute requests in response to the changed topology. In addition, they participate in load-balancing requests to the database. The work done to provide load balancing is actually distributed among the clients.

Changes in topology are coordinated by an orchestrator, which is a server node elected to be the single arbiter of cluster configuration changes. All topology changes are sent to all nodes in the cluster; even if the orchestrator node goes down, a new node can be elected to that position and system operation can continue uninterrupted.

Querying data

There are two patterns for querying data from Couchbase. The most efficient one is via key pattern. If the key of the document is known, the complexity of retrieval of the documents is O(1). It is also possible to retrieve multiple documents using multi-get. Using batch retrieval is very efficient when the client need to deal with a list of documents, because the number of client round-trip calls is reduced.

Another pattern of querying a Couchbase Server is performed via “views,” Couchbase terminology for indexes. Views are the mechanism used to query Couchbase data. To define a view, you build a specific kind of document called a design document which holds the JavaScript code that implements the map reduce operations that create the view’s index. Design documents are bound to specific buckets, which means that queries cannot execute across multiple buckets. Couchbase’s “eventual consistency” plays a role in views as well. If you add a new document to a bucket or update an existing document, the change may not be immediately visible.

Query parameters offer further filtering of an index. For example, you can use query parameters to define a query that returns a single entry or a specified range of entries from within an index.

Couchbase indexes are updated incrementally. That is, when an index is updated, it’s not reconstructed. Updates only involve those documents that have been changed or added or removed since the last time the index was updated. You can configure an index to be updated when specific circumstances occur. For example is possible to have the view updated based on a time interval or when a number of documents have updated.

Performance

Performance should be measured for meaningful workloads. This can help to understand performance characteristics for certain situations and choose the right NoSQL technology.

One of the benchmarks conducted to compare NoSQL technologies is called: YCSB (Yahoo Cloud Serving Benchmark). Its purpose is to focus on databases and on performance. It is open-source, extensible, has rich selection of connectors for various database technologies, it is reproducible and compares latency vs throughput.

The results showed that Couchbase has the lowest latencies and the highest throughput.

Performance and consistency

To ensure consistency, it is important to execute the read/write operations on the primary nodes only. The NoSQL solutions which have only one primary node are limited from the performance point of view, because clients cannot leverage secondary nodes. The first alternative is to execute read operations on all nodes (primary and secondary). In that case, read performance is better, but no longer consistent because replication is asynchronous by default. The second alternative is synchronous replication. Which leads to consistent data, but performance degradation.

Compared to the fist approach, Couchbase Server ensures data consistency, too. It also performs read/write operations on the primary nodes only to keep the data consistent. The only difference is that it leverage all the nodes, because every node is a primary node (for a subset of partitioned data).

All the read/write operations are performed on the primary nodes.

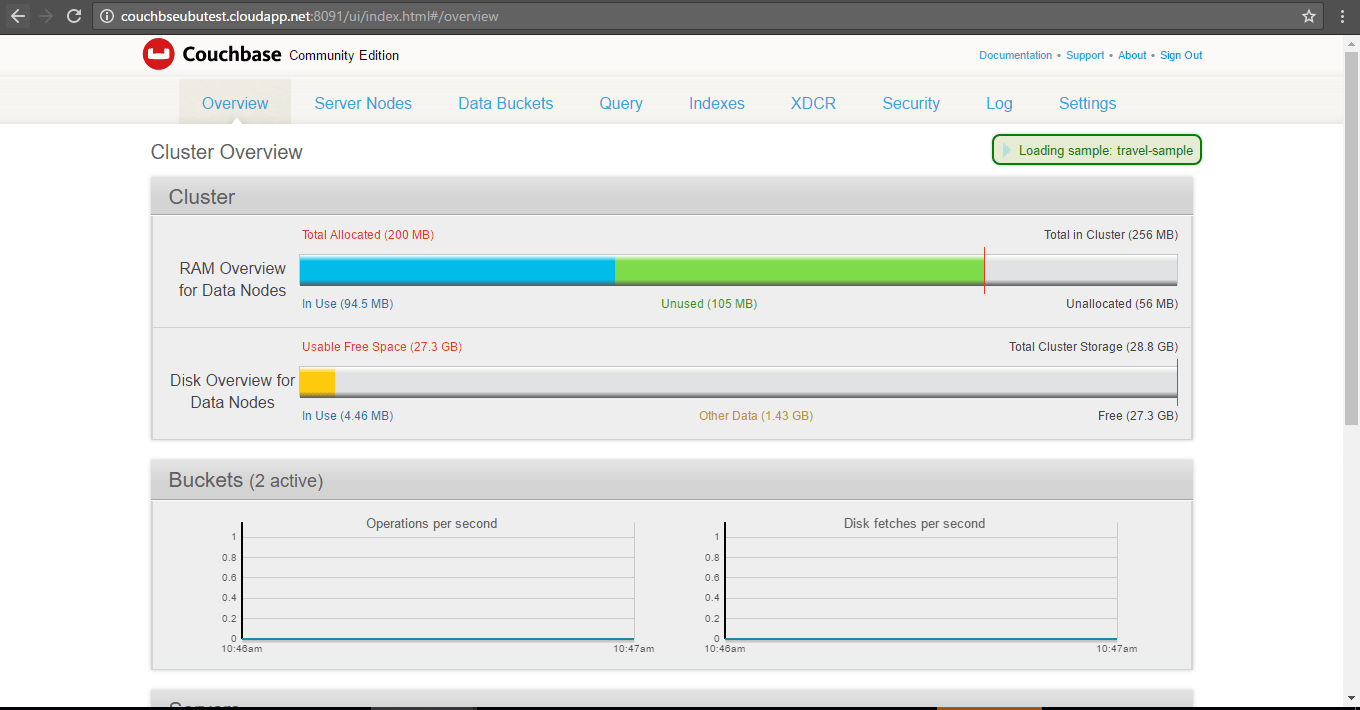

Monitoring

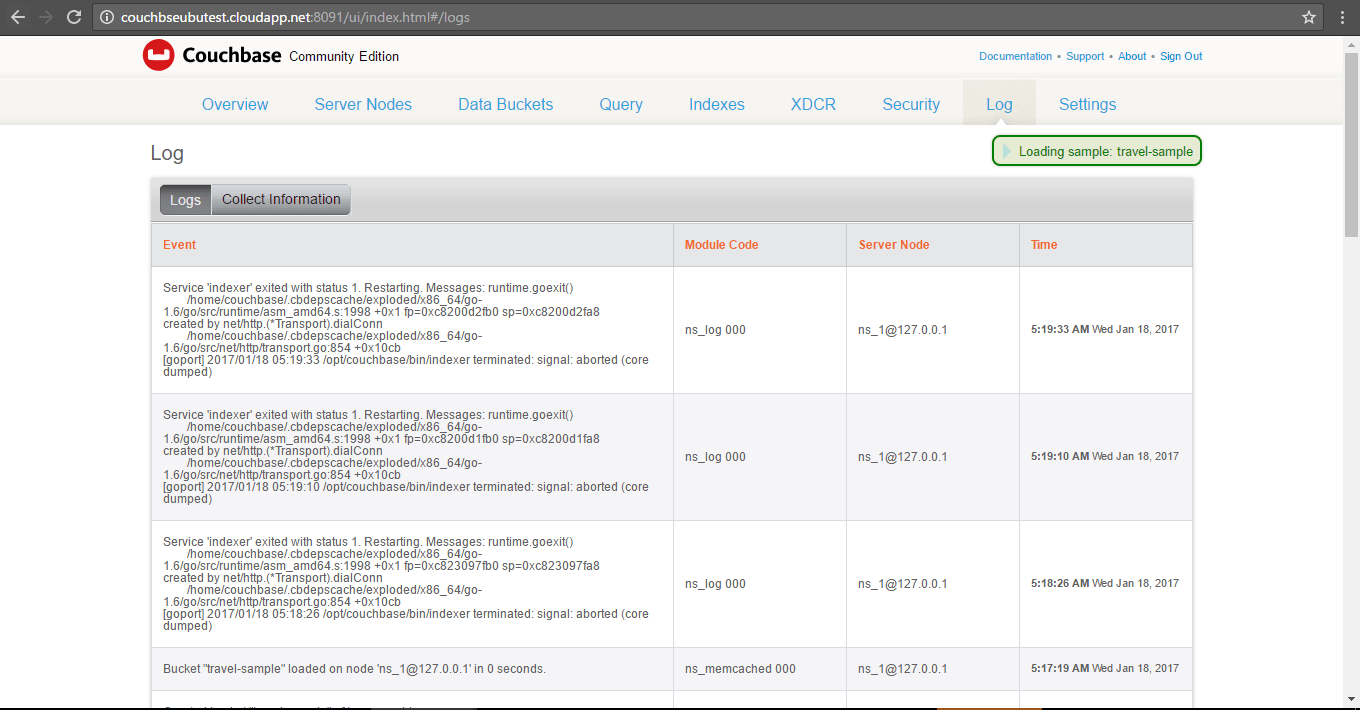

Couchbase Server incorporates a complete set of statistical and monitoring information. The statistics are provided through all of the administration interfaces. Within the Web Administration Console, a complete suite of statistics are provided, including built-in real-time graphing and performance data.

The statistics are divided into a number of groups, allowing you to identify different states and performance information within your cluster:

- By Node – Node statistics show CPU, RAM and I/O numbers on each of the servers and across your cluster as a whole.

- By vBucket – The vBucket statistics show the usage and performance numbers for the vBuckets used to store information in the cluster.

- By View – View statistics display information about individual views, including the CPU usage and disk space used so that you can monitor the effects and loading of a view on Couchbase nodes.

- By Disk Queues – monitor the queues used to read and write information to disk and between replicas. Can be helpful in determining whether the cluster should expand to reduce disk load.

Real World Use Cases

Real-time user activity

Consume a user activity feed from a messaging system (Kafka) and store activity metrics in Couchbase. The service is capable of answering, in real time, questions like: when the user was active last time? Has the user ever played a game? All queries can be executed very fast. Based on this kind of real-time answers, the application is capable of targeting various type of users for business-related flows.

Keep user preferences

Store various user preferences across all the web products in Couchbase. This is an alternative to keeping user data in HTTP sessions, cookies or relational databases.

Promotions Management and tracking

Management of promotions which attract customers to try various products based on predefined qualifying criteria. The customer promotion progress is tracked as well. The system is capable to identify in real-time if a customer has succesfully fulfilled a promotion and awards the configured reward.

-Major Features of Couchbase :

- Easier, Faster Development- Iterate faster by leveraging a flexible data model and a powerful query language to write less code and avoid database changes.

- Flexible Data Modeling-Add features by extending the data model to nest or reference data, or by adding new indexes and queries on the same data.

- Powerful Querying- Interact with data by sorting, filtering, grouping, combining, and transforming it with a query instead of complex application code.

- SQL Integration and Migration-Migrate relational data and queries as is, continue to leverage enterprise BI and reporting tools with full support for SQL.

- Big Data Integration- Integrate with Hadoop, Spark, and Kafka to enrich, distribute, and analyze operational data both offline and in real time.

- Mobile / IoT Extensions- Simplify mobile development by leveraging a cross-platform, embedded database with automatic synchronization to the cloud.

- Elastic Scalability- Scale easily, efficiently and reliably, from a few nodes to many, one data center to multiple, all with “push button” simplicity.

- Consistent High Performance- Build responsive applications and support millions of concurrent users by leveraging more memory and asynchronous operations.

- Always-on Availability- Maintain 24×365 uptime by enabling replication and automatic failover, and performing all maintenance operations online.

- Multi-Data Center Deployment- Operate in multiple geographies to improve performance and availability by configuring cross data center replication.

- Simple and Powerful Administration- Deploy, manage, and monitor deployments with an integrated admin UI and automated tasks optimized for large deployments.

AWS

Installation Instructions For Ubuntu

Note : 1. Please use “Couchbase” in the place of “stack_name”

2. How to find PublicDNS in AWS

A) SSH Connection: To connect to the operating system,

1) Download Putty.

2) Connect to virtual machine using following SSH credentials :

- Hostname: PublicDNS / IP of machine

- Port : 22

Username: To connect to the operating system, use SSH and the username is ubuntu.

Password : Please Click here to know how to get password .

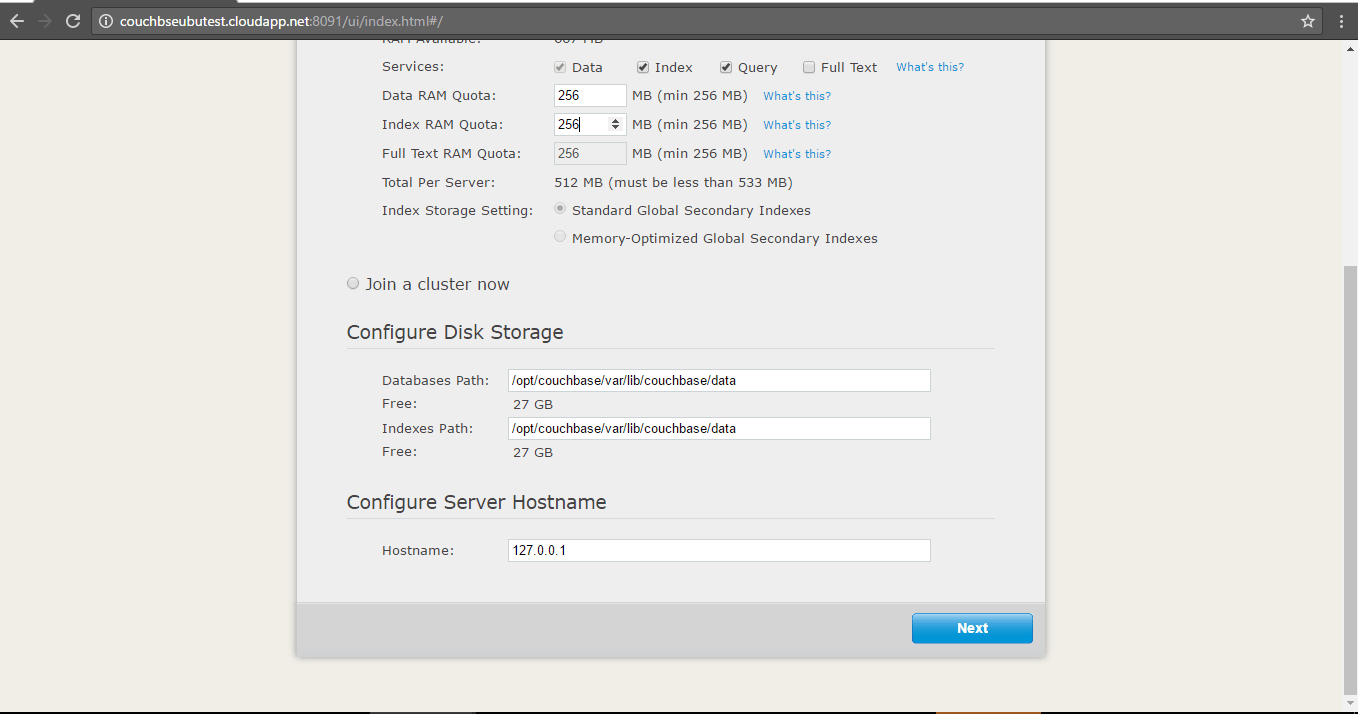

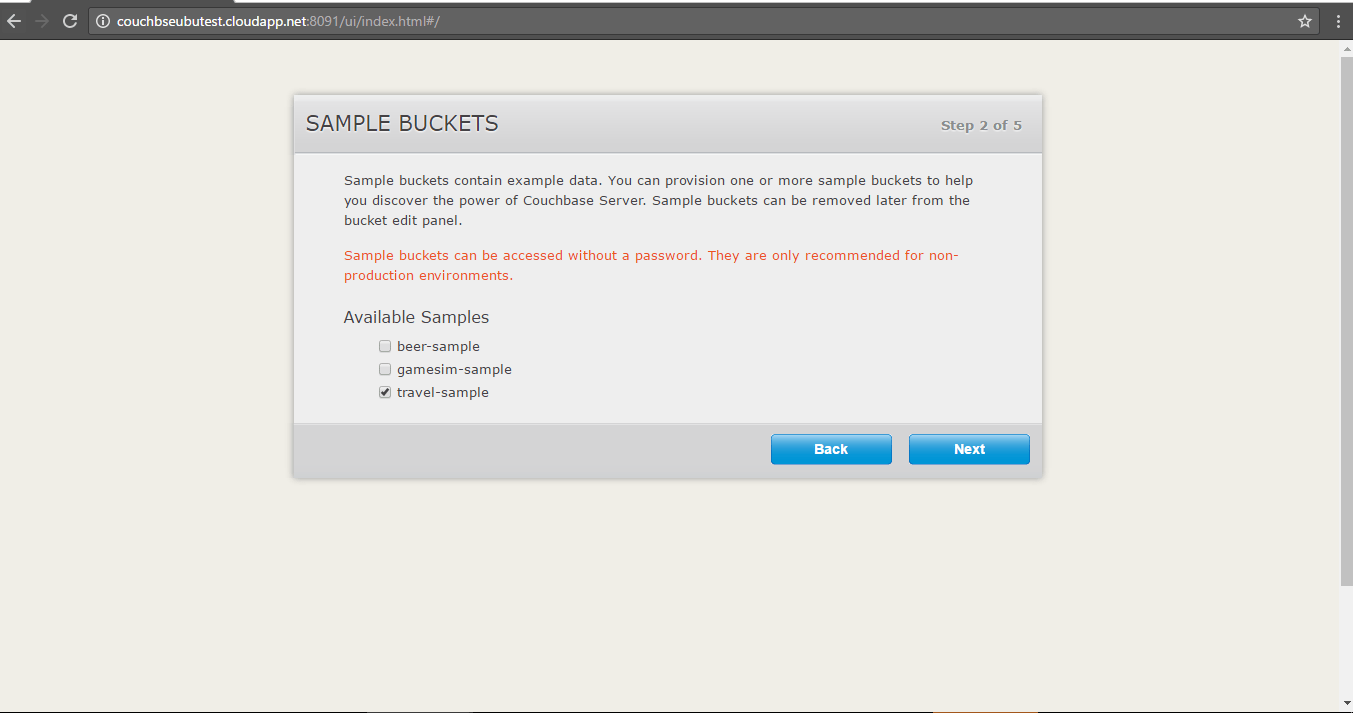

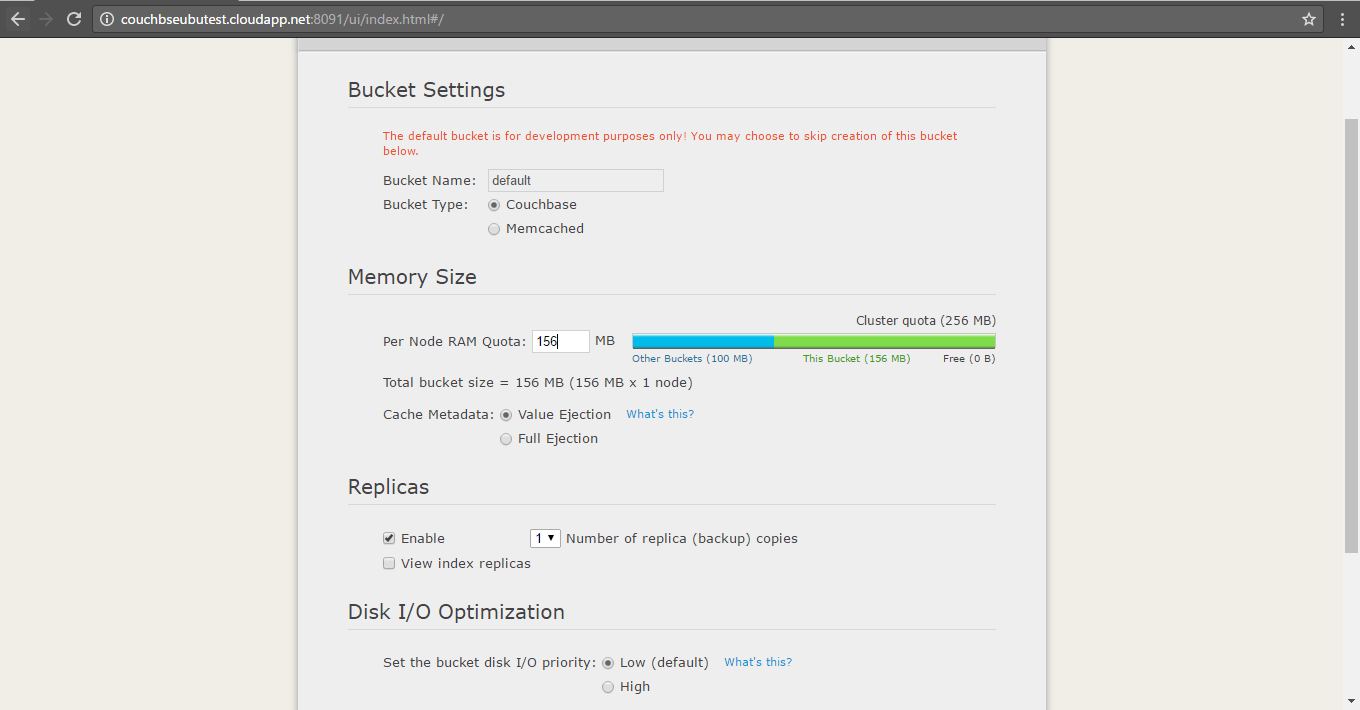

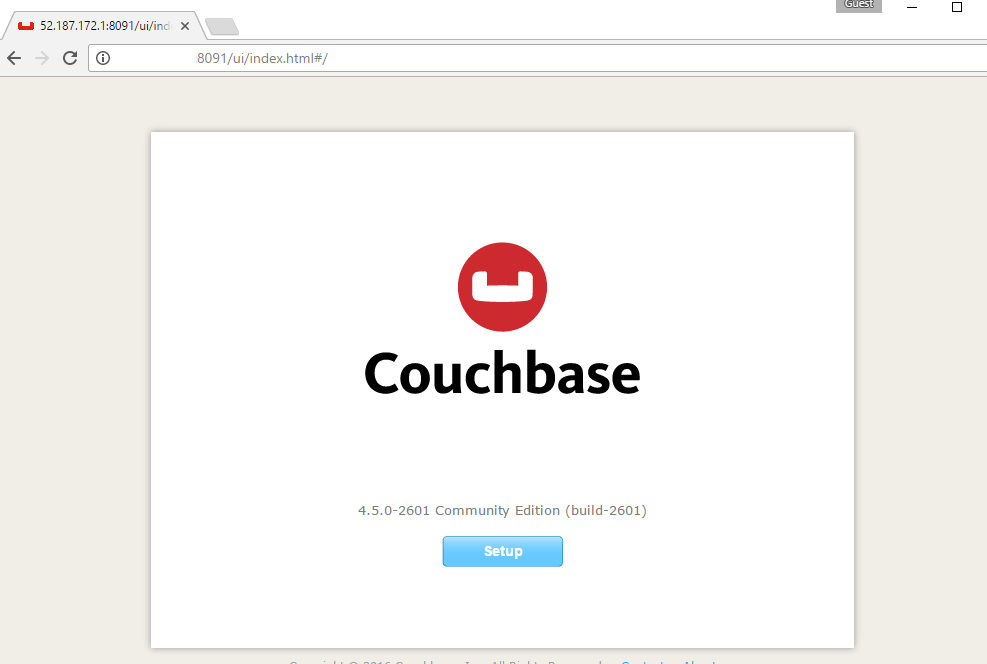

B) Application URL: Access the application via a browser at http://PublicDNS:8091

Note: Open port 8091 on server Firewall.

C) Other Information:

Default installation path: will be on your web root folder “/var/www/html/Couchbase” ( Please see above Note for stack name)

2.Default ports:

- Linux Machines: SSH Port – 22 or 2222

- Http: 80 or 8080

- Https: 443

- Sql or Mysql ports: By default these are not open on Public Endpoints. Internally Sql server: 1433. Mysql :3306

- Open port 8091 on server Firewall.

Configure custom inbound and outbound rules using this link

You can install Couchbase Server on Linux, Microsoft Windows, or OS X systems. For more installation information Please click here.

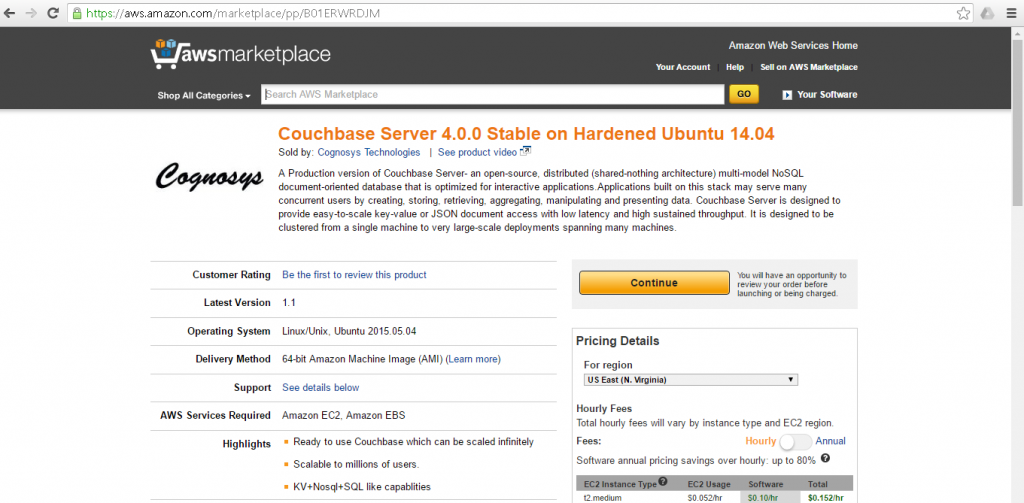

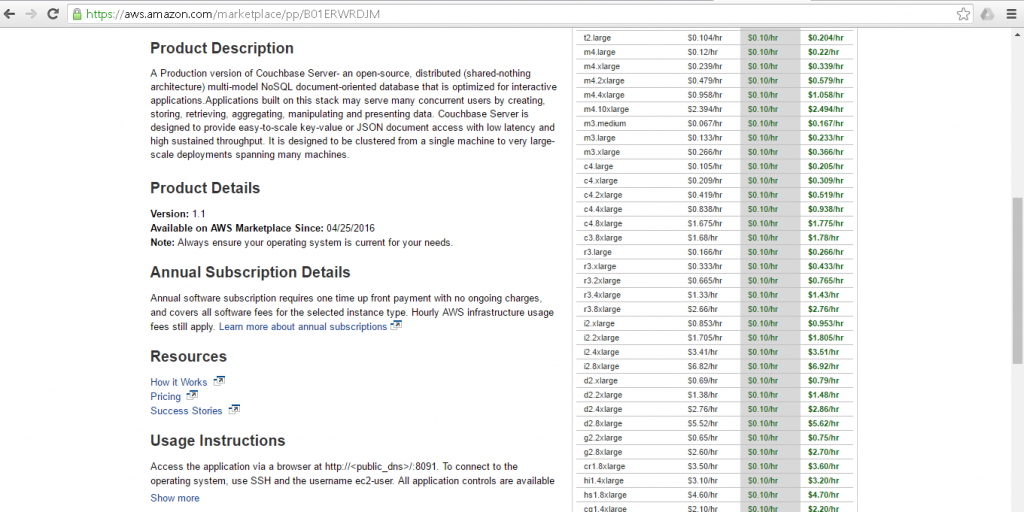

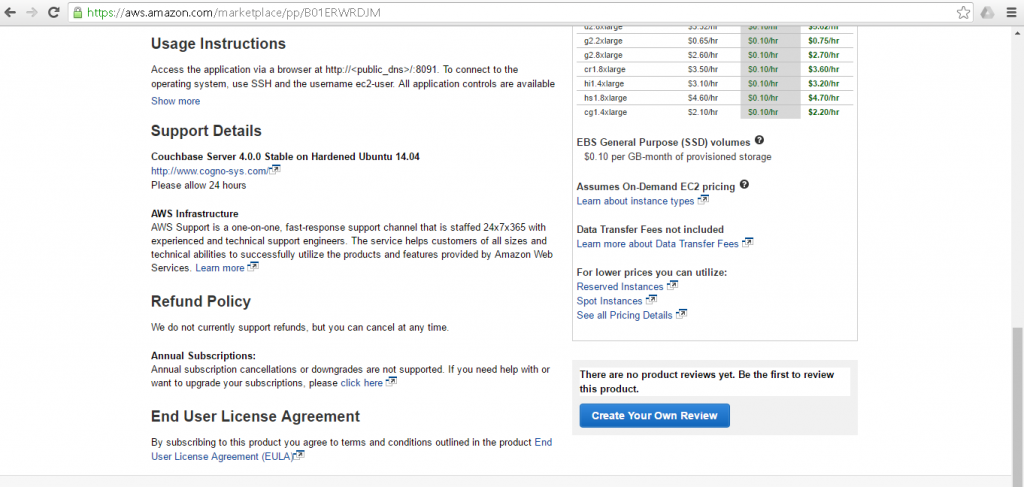

AWS Step by Step Screenshots

Product Overview – Couchbase Server

Azure

Installation Instructions For Ubuntu

Note : How to find PublicDNS in Azure

A) SSH Connection: To connect to the operating system,

1) Download Putty.

2) Connect to virtual machine using following SSH credentials:

- Hostname: PublicDNS / IP of machine

- Port : 22

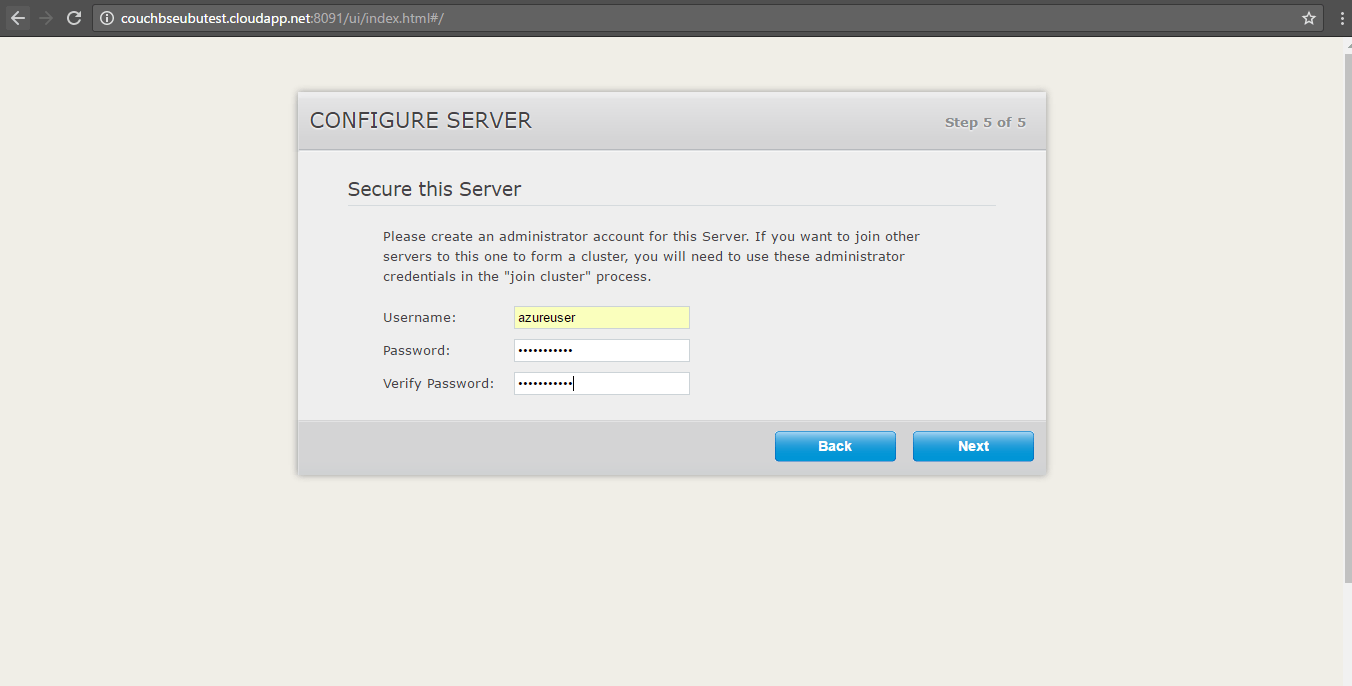

Username: Your chosen username when you created the machine ( For example: Azureuser)

Password : Your Chosen Password when you created the machine ( How to reset the password if you do not remember)

B) Other Information:

1.Default installation path: will be on your web root folder “/var/www/html/”

2. Allow 8091 in cloud firewall and Open a browser and navigate to “http://<Your IP>:8091/” for further Couchbase configuration.

3. To access Webmin interface for management please follow this link

Configure custom inbound and outbound rules using this link

Installation Instructions For Centos

Note : How to find PublicDNS in Azure

A) SSH Connection: To connect to the operating system,

1) Download Putty.

2) Connect to virtual machine using following SSH credentials :

- Hostname: PublicDNS / IP of machine

- Port : 22

Username: Your chosen username when you created the machine ( For example: Azureuser)

Password : Your Chosen Password when you created the machine ( How to reset the password if you do not remember)

B) Other Information:

1.Default installation path: will be on your web root folder “/var/www/html/”

2. Allow 8091 in cloud firewall and Open a browser and navigate to “http://<Your IP>:8091/” for further Couchbase configuration.

3. To access Webmin interface for management please follow this link

Configure custom inbound and outbound rules using this link

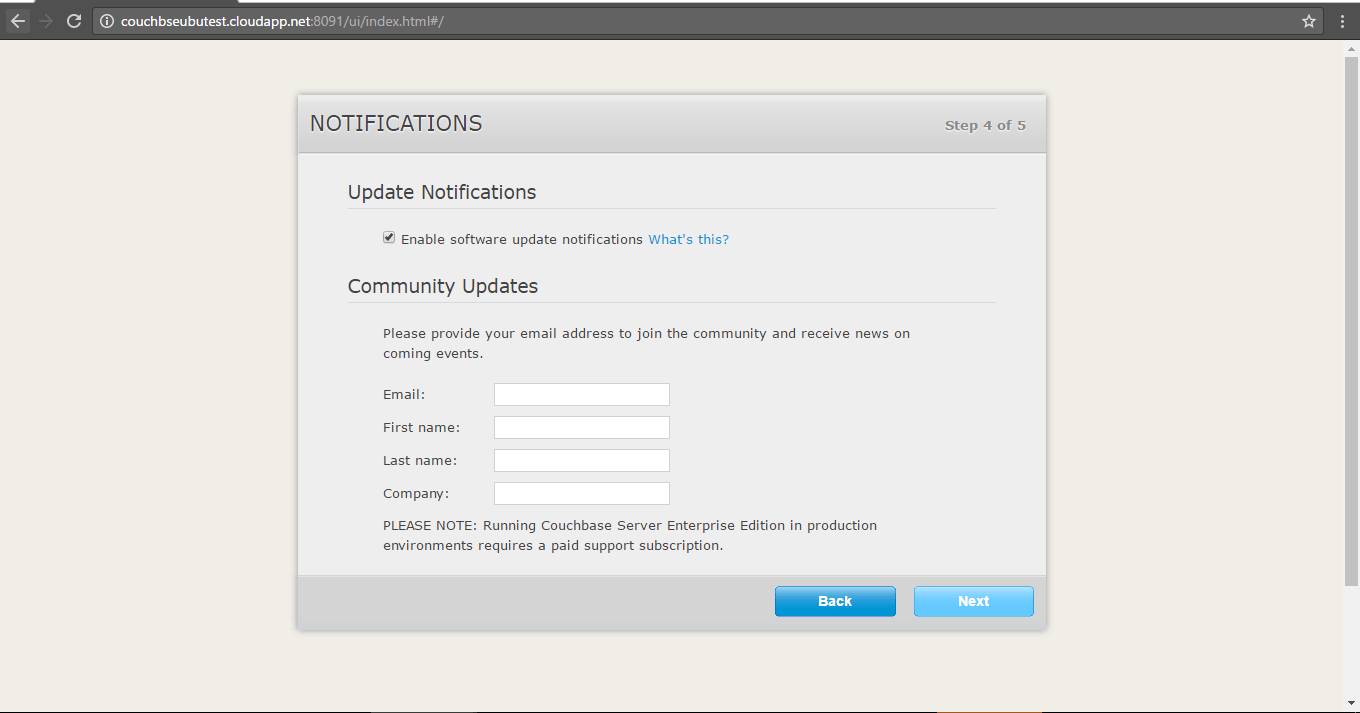

Azure Step by Step Screenshots

Videos

Introduction To Couchbase Server

Couchbase Server Architecture

Query Workbench Tool Overview in Couchbase 4.5